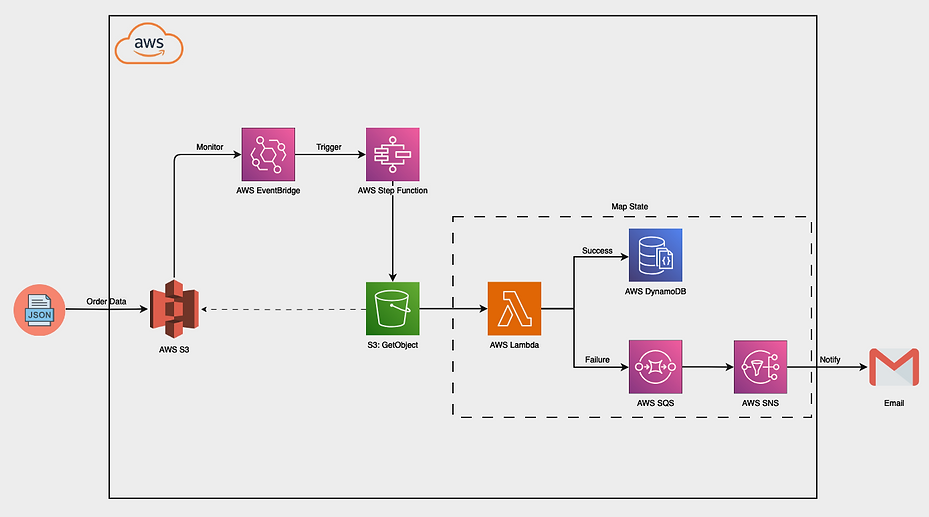

AWS Sales Events Batch

Problem:

- Sales event data needed to be efficiently processed and stored using various AWS services.

Solution:

- Data Ingestion: Sales event data is uploaded to Amazon S3 in batches.
- Monitoring: Amazon EventBridge watches the S3 bucket for new data uploads.
- Workflow Automation: When new data is detected, EventBridge triggers an AWS Step Functions state machine that starts the processing workflow.
- Data Processing: The state machine invokes an AWS Lambda function, which reads the uploaded object with S3 GetObject and processes the data.
- Success Path: When processing succeeds, the Lambda function stores the processed data in Amazon DynamoDB.
- Failure Path: If processing fails, the data is sent to Amazon SQS, which then triggers Amazon SNS to email the admin so that errors are noticed and handled promptly.
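The processing and success-path steps above can be sketched as a small Lambda handler. This is a minimal illustration, not the project's actual code: it assumes the Lambda receives the EventBridge "Object Created" event as its input, that each batch file is a JSON array of records, and that the table is named `SalesEvents` with an `event_id` partition key (all hypothetical). The S3 client and DynamoDB table are passed in so the logic can be exercised without AWS access.

```python
import json
from decimal import Decimal


def process_batch(event, s3_client, table):
    """Read the uploaded batch from S3 and store each record in DynamoDB.

    `event` is assumed to be the EventBridge "Object Created" event that
    started the workflow. `s3_client` and `table` are injected for testing.
    """
    bucket = event["detail"]["bucket"]["name"]
    key = event["detail"]["object"]["key"]

    # S3 GetObject returns the object payload as a streaming body.
    response = s3_client.get_object(Bucket=bucket, Key=key)
    body = response["Body"].read()

    # boto3's DynamoDB resource rejects Python floats, so parse them as Decimal.
    records = json.loads(body, parse_float=Decimal)
    if not isinstance(records, list):
        # Assumption: one batch file holds a JSON array of sales events.
        raise ValueError("expected a JSON array of sales events")

    for index, record in enumerate(records):
        # "event_id" is a hypothetical partition key built from key and index.
        table.put_item(Item={"event_id": f"{key}#{index}", "payload": record})

    return {"bucket": bucket, "key": key, "stored": len(records)}


def lambda_handler(event, context):
    # boto3 is imported here so process_batch stays testable offline.
    import boto3

    s3_client = boto3.client("s3")
    table = boto3.resource("dynamodb").Table("SalesEvents")  # hypothetical name
    return process_batch(event, s3_client, table)
```

Any exception raised here fails the Lambda invocation, which is what lets the workflow route the batch to the failure path.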

System Architecture
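As a rough illustration of how the services could be wired together, the sketch below builds an EventBridge event pattern for S3 uploads and a Step Functions state machine definition in Amazon States Language. It is one possible reading of the architecture, not the project's actual configuration: the state names, function names, and all ARNs and URLs passed in are hypothetical, and the SQS-then-SNS failure path is modeled here as two chained states. The event pattern only matches if EventBridge notifications are enabled on the bucket.

```python
import json


def s3_upload_event_pattern(bucket_name):
    """EventBridge pattern matching newly created objects in one bucket."""
    return {
        "source": ["aws.s3"],
        "detail-type": ["Object Created"],
        "detail": {"bucket": {"name": [bucket_name]}},
    }


def build_state_machine(function_arn, queue_url, topic_arn):
    """State machine: invoke Lambda; on any error, queue the batch and notify."""
    return {
        "Comment": "Sales events batch workflow (illustrative sketch)",
        "StartAt": "ProcessBatch",
        "States": {
            "ProcessBatch": {
                "Type": "Task",
                "Resource": "arn:aws:states:::lambda:invoke",
                # Pass the triggering EventBridge event through to the Lambda.
                "Parameters": {"FunctionName": function_arn, "Payload.$": "$"},
                "Catch": [
                    {
                        "ErrorEquals": ["States.ALL"],
                        "ResultPath": "$.error",  # keep input, attach error info
                        "Next": "QueueFailedBatch",
                    }
                ],
                "End": True,
            },
            "QueueFailedBatch": {
                "Type": "Task",
                "Resource": "arn:aws:states:::sqs:sendMessage",
                "Parameters": {"QueueUrl": queue_url, "MessageBody.$": "$"},
                "ResultPath": None,  # discard the SQS response, keep the input
                "Next": "NotifyAdmin",
            },
            "NotifyAdmin": {
                "Type": "Task",
                "Resource": "arn:aws:states:::sns:publish",
                "Parameters": {
                    "TopicArn": topic_arn,
                    "Subject": "Sales events batch failed",
                    "Message.$": "$.error.Cause",
                },
                "End": True,
            },
        },
    }


if __name__ == "__main__":
    definition = build_state_machine("FUNCTION_ARN", "QUEUE_URL", "TOPIC_ARN")
    print(json.dumps(definition, indent=2))
```

Keeping the failure handling in the state machine rather than inside the Lambda means the function only has to raise an error, and the workflow decides what happens next.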

Data:

- Nested JSON structure
- Good Data: contains both contact-info and order-info
- Bad Data: contains only order-info
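The good/bad distinction above can be expressed as a small validation step. This is a hedged sketch: only the `contact-info` and `order-info` section names come from the description, while the function names and the inner fields in the sample records (`email`, `order-id`) are hypothetical.

```python
REQUIRED_SECTIONS = ("contact-info", "order-info")


def missing_sections(record):
    """Return the required sections that are absent or empty in a record."""
    return [
        name
        for name in REQUIRED_SECTIONS
        if not isinstance(record.get(name), dict) or not record[name]
    ]


def split_records(records):
    """Partition records into (good, bad) based on the required sections."""
    good, bad = [], []
    for record in records:
        (bad if missing_sections(record) else good).append(record)
    return good, bad


if __name__ == "__main__":
    # Sample records with hypothetical inner fields.
    good_record = {
        "contact-info": {"email": "customer@example.com"},
        "order-info": {"order-id": "1001"},
    }
    bad_record = {"order-info": {"order-id": "1002"}}

    good, bad = split_records([good_record, bad_record])
    print(len(good), "good,", len(bad), "bad")
```

A processing function could raise an error when `missing_sections` returns anything, which would send that batch down the failure path.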

Video Walkthrough

Watch this video to see the complete implementation of the AWS Sales Events Batch pipeline. It shows sales event data being ingested into Amazon S3, EventBridge detecting new uploads, and Step Functions orchestrating the workflow. You'll see how AWS Lambda processes the data, how successfully processed data is written to DynamoDB, and how failures are handled with SQS and SNS notifications.

Conclusion

This project demonstrates a data processing pipeline that handles sales event data automatically and reliably. By integrating Amazon S3, EventBridge, Step Functions, Lambda, DynamoDB, SQS, and SNS, it covers the full data workflow, from ingestion to error handling. The implementation shows how AWS services can be combined into scalable, fault-tolerant data solutions, and it reflects my ability to design and build complex data infrastructure projects.